- Updated 09/04/2025

How to Rank in ChatGPT: How LLMs Decide What Products to Recommend (And What You Can Learn From It)

Buyers are already asking ChatGPT and other large language models (LLMs) for product and vendor recommendations. Not tomorrow. Not next year. Now.

While there is some speculation about how LLMs make recommendations, most research to date has focused on gaining mentions and citations rather than on getting your brand recommended when someone is searching for a solution. The practice of optimizing for AI mentions is called Generative Engine Optimization, or GEO for short.

I conducted a real-world experiment to understand how large language models (LLMs) decide what to recommend. I gave ChatGPT a series of prompts about one specific type of buying decision: PLM (Product Lifecycle Management) software for industrial and mid-market manufacturers.

In this post, I’ll walk you through what I found, how the recommendations changed based on the prompt, and what signals seem to matter most for getting recommended. If you’re a marketer or business leader wondering how to appear in LLM answers, this should give you a clearer path forward for your Generative Engine Optimization (GEO) efforts.

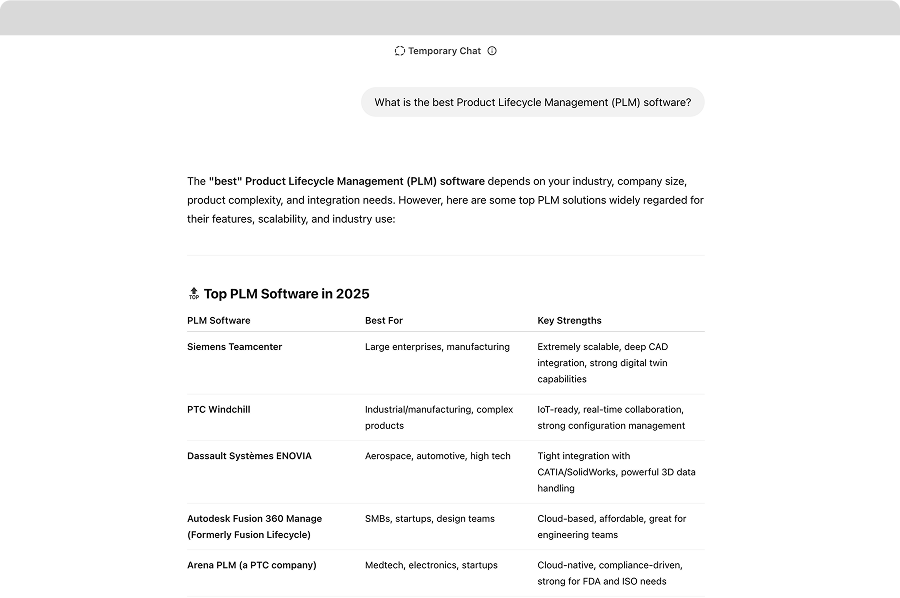

Step 1: Start Broad

I wanted to start very broadly to gain insights into the breadth of factors that LLMs might consider when recommending B2B software solutions.

I began with simple, high-level prompts like:

- “What is the best PLM software?”

- “Who are the top PLM providers?”

ChatGPT responded with a list of well-known names:

- Siemens Teamcenter

- PTC Windchill

- Dassault ENOVIA

- Autodesk Fusion 360 Manage

- Arena PLM

- Oracle Agile PLM

- SAP PLM

When I asked ChatGPT why it recommended these options, it explained that the recommendations were based on broad brand authority, market share, and analyst validation.

Logically, this makes sense. When faced with a broad request, the LLM identified the safest, most widely adopted solutions. Simply put, any of the recommended solutions would be a safe choice for most PLM needs.

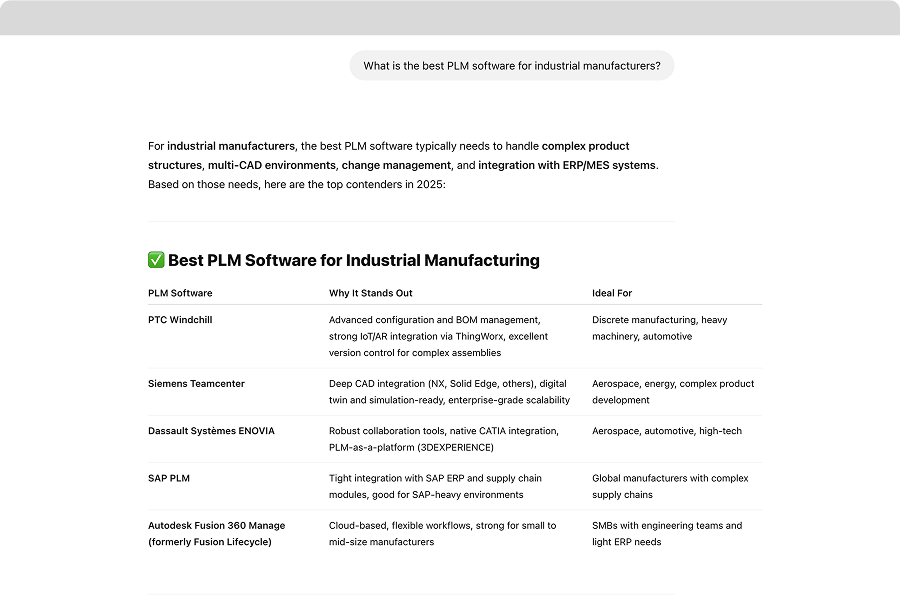

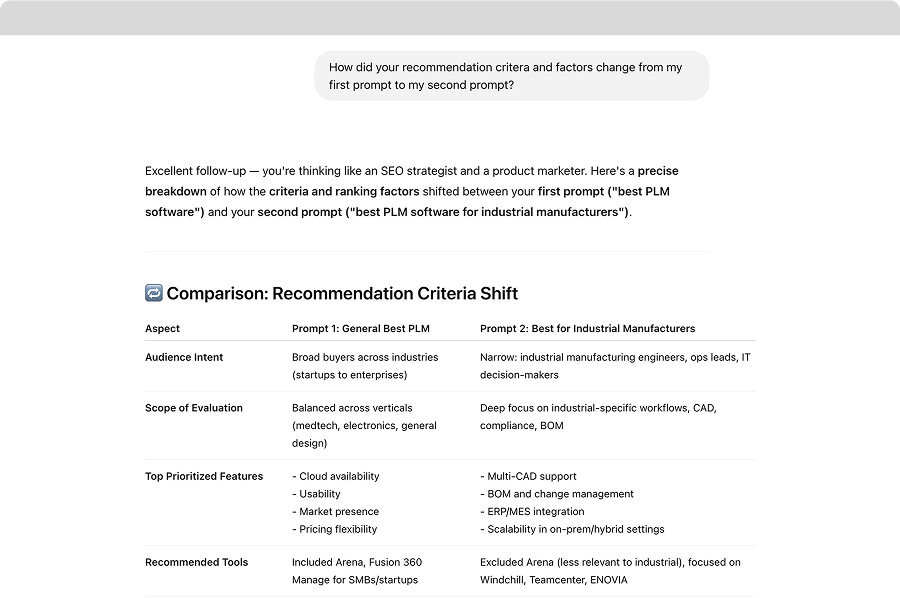

Step 2: Add Industry Context

As with search behavior, motivated buyers will likely ask with more specifics and detail to make sure what they find fits their exact needs. To explore this further, I added industry context.

I asked:

- “What is the best PLM software for industrial manufacturers?”

The list changed. While many of the same players appeared, the reasoning became more specific.

With this list, ChatGPT focused on:

- Deep CAD/ERP integration

- Multi-level BOM management

- Support for complex engineering workflows

- Fit for heavy industry and capital equipment

With the broad query, the LLM looked for feature-rich, well-established solutions. With the industry focus, it looked at specific capabilities and use cases such as integration with Siemens NX, compatibility with shop-floor systems, and digital twin support.

The recommendations favored platforms designed for large-scale, global industrial operations.

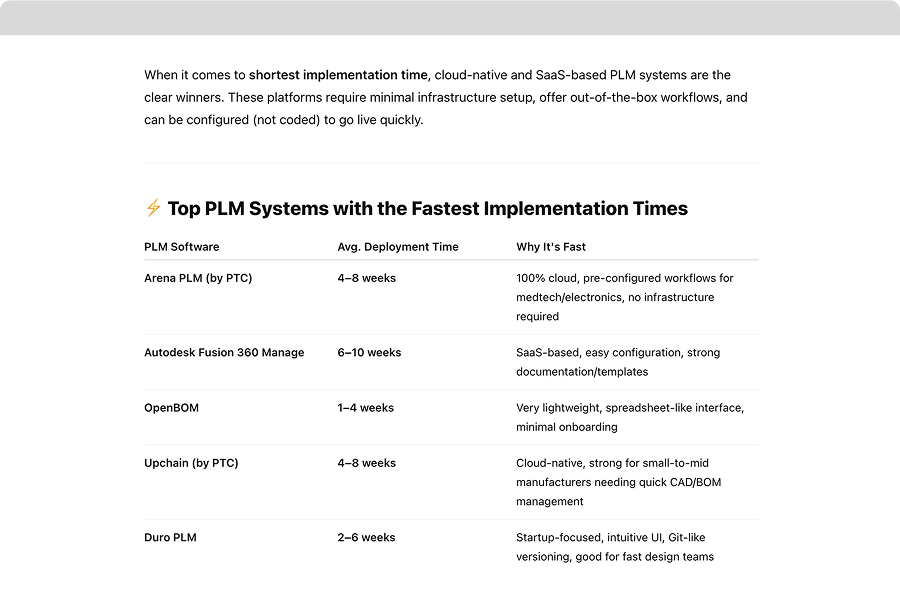

Step 3: Add Company Size and Buying Constraints

I added details and buying criteria to better replicate a “real-world” scenario. Often, factors such as company size, implementation time, post-launch support, and others significantly influence a business’s decision to select B2B software.

To narrow in, I asked:

- “What PLM software is best for companies with fewer than 500 employees?”

- “What PLM has the shortest implementation time?”

This time, ChatGPT’s recommendations shifted dramatically. Brand behemoths like Siemens, Oracle, and SAP dropped off, and tools like Arena PLM, Autodesk Fusion 360 Manage, and OpenBOM moved to the top.

ChatGPT reported that its updated signals included:

- Cloud-native architecture

- No IT team required

- Out-of-the-box workflows

- Fast deployment (2–8 weeks)

- Clear pricing models

- Simple onboarding and UI

In short: the recommendations matched buyer constraints. Smaller teams with limited resources were guided to leaner, SaaS-first tools.
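The prompt progression in Steps 1 through 3 can be sketched as a simple ladder that moves from broad to specific. This is a minimal illustration in Python, not the tooling used in the experiment: the function name `build_prompt_ladder` and its parameters are hypothetical, and the code only builds the prompt strings. It does not call any LLM API.

```python
def build_prompt_ladder(category, industry, constraints):
    """Return prompts ordered from broad to specific.

    All names here are illustrative; the wording mirrors the prompts
    used in the experiment above.
    """
    prompts = [
        f"What is the best {category} software?",
        f"What is the best {category} software for {industry}?",
    ]
    # Add one constrained prompt per buying constraint.
    prompts.extend(
        f"What {category} software is best for {constraint}?"
        for constraint in constraints
    )
    return prompts


ladder = build_prompt_ladder(
    category="PLM",
    industry="industrial manufacturers",
    constraints=["companies with fewer than 500 employees"],
)
for step, prompt in enumerate(ladder, start=1):
    print(f"Step {step}: {prompt}")
```

Running the same ladder for your own category and buyer constraints makes it easier to compare how the recommended vendors shift at each step.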

Step 4: Ask Why?

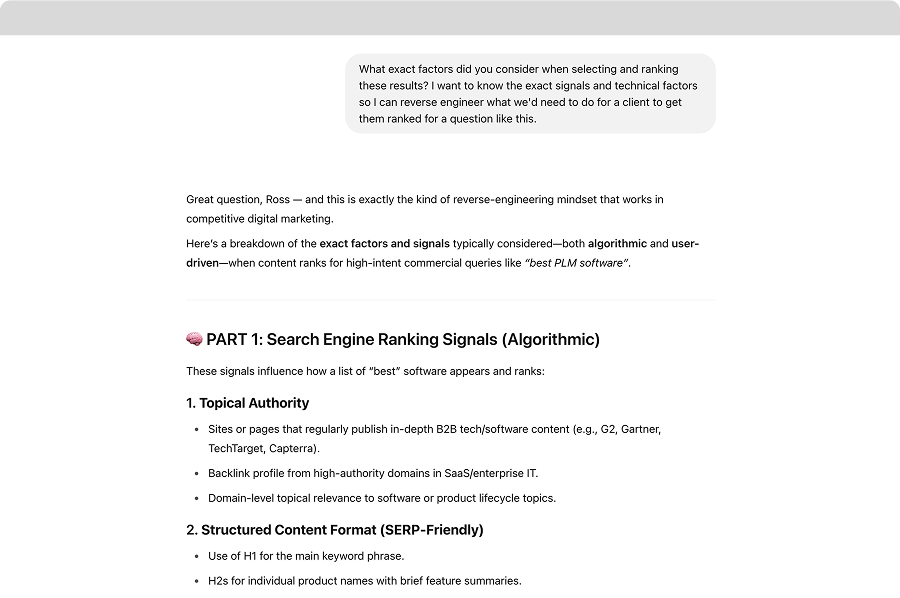

I asked ChatGPT for the reasoning behind each set of recommendations along the way. I then asked it to summarize its responses and rankings as a whole. The goal was to understand not just how the rankings changed with each query, but also the overall approach the LLM uses to rank options for a given query.

I asked:

- “Why did you recommend these vendors?”

- “What signals were you using to make these rankings?”

Based on what I’ve seen thus far, the signals used to rank and recommend solutions are highly contextual. Every query will likely consider different factors. However, we can identify trends and commonalities based on the type of solution someone is looking for or the problem they’re hoping to solve.

In the case of PLM software, ChatGPT described two types of signals:

1. Functional Signals (product capabilities)

- CAD integration

- BOM complexity

- Regulatory compliance

- Cloud vs on-prem deployment

- ERP and MES compatibility

2. Non-Functional Signals (brand visibility and trust)

- Analyst reports (Gartner, Forrester)

- Peer reviews (G2, Capterra, Reddit)

- Fortune 500 client logos

- Public company status

- Ecosystem integrations (CAD, ERP, IoT)

- Case studies and customer proof

Even when the capabilities were similar, ChatGPT favored vendors that were more widely adopted, visible in analyst reports, and mentioned across trusted third-party platforms.

This matched what we’ve seen in other spaces: brand strength and third-party validation carry serious weight.

What This Means for Generative Engine Optimization (GEO)

If you want your product or service to appear in AI-driven recommendations, your strategy must reflect how LLMs “think.”

Here’s how:

1. Build visibility across third-party sources

It’s clear that LLMs heavily rely on brand mentions and citations. ChatGPT was pulling from sites like G2, Reddit, Capterra, and Gartner for software solutions. These sites might not be relevant to your industry, but it will be essential to have visibility on the sites that are.

If your brand isn’t showing up in these ecosystems, it likely won’t appear in the LLM’s answers.
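One rough way to check whether your brand shows up is to save the answers an LLM gives to your target prompts and count which brands each answer mentions. The sketch below assumes you have already collected the answer text by hand or by other means. The function name `count_brand_mentions`, the sample answers, and the brand "Example PLM" are all hypothetical placeholders, not real ChatGPT output.

```python
import re
from collections import Counter


def count_brand_mentions(responses, brands):
    """Count how many responses mention each brand at least once."""
    counts = Counter({brand: 0 for brand in brands})
    for text in responses:
        for brand in brands:
            # Case-insensitive match on the whole brand phrase.
            pattern = r"\b" + re.escape(brand) + r"\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                counts[brand] += 1
    return counts


# Placeholder answers for illustration only, not real ChatGPT output.
saved_responses = [
    "For small teams, consider Arena PLM or OpenBOM.",
    "Top options include Siemens Teamcenter and Arena PLM.",
]
mentions = count_brand_mentions(
    saved_responses, ["Arena PLM", "OpenBOM", "Example PLM"]
)
for brand, count in mentions.items():
    print(f"{brand}: mentioned in {count} of {len(saved_responses)} answers")
```

A brand that never appears across a representative set of prompts is a sign that third-party visibility needs work.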

2. Speak to specific use cases and buyer profiles

LLMs tailor their answers to prompts. You must understand your target audience, including how they frame their needs. Do they think they need a PLM designed for small manufacturers? Or are they more focused on the PLM that’s fastest to implement?

Once you’ve identified their thought process and decision criteria, your website must clearly communicate how your solution best fits their use case. Create dedicated pages, case studies, and messaging that reflect their needs.

If someone is looking for a PLM designed for small manufacturers, your website needs to tell the LLMs that your software is the right solution for them.

3. Cover both technical and strategic value

It’s clear LLMs look at feature/service fit: does the solution do what you need? For example, if you need a new HubSpot website, the LLM will check that a marketing agency offers web design and HubSpot websites before recommending it.

However, they also look at the broader fit. This means things like time-to-value, ecosystem fit, adoption ease, and support. The specific factors will change depending on the context and query, but broader fit is likely to remain one of the criteria.

4. Strengthen your digital footprint

Much like a strong backlink profile, an extensive digital footprint increases the confidence of LLMs in recommending your brand. Get mentioned in analyst reports. Pitch journalists. Contribute to industry publications. Participate in Reddit threads. Answer Quora questions. Every mention is a signal LLMs will remember.

5. Write content that helps buyers make decisions

LLMs often echo content that compares, contrasts or evaluates. Publish decision-support content like comparison tables, feature maps, and industry-specific reviews.

LLM / AI Optimization vs. Traditional SEO

| Feature / Signal | Traditional SEO | LLM / AI Optimization |
|---|---|---|
| Primary data sources | Google, Bing, other search engines pulling from indexed web pages | LLMs trained on web content, third-party sites, reviews, directories, and social media |
| Trust signals | Backlinks (volume and domain authority) | Brand strength, citations in analyst reports, community mentions, third-party reviews |
| Content signals | Keywords in on-page content, title tags, meta descriptions | Clear answers, conversational tone, low reading level, decision-support content |
| Site structure signals | Clean markup, schema.org, internal linking, crawlability | Same technical foundation matters; structured data helps LLMs interpret content better |
| User experience signals | Page speed, mobile responsiveness, error rates | Same UX factors apply; LLMs favor easily accessible, high-quality sites |
| Coverage of use cases | Broad vs. long-tail keyword pages | Prompts can be broad or niche; content must address the spectrum of needs and scenarios |
| Third-party validation | Guest posts, citations, earned media | Mentions in Reddit, Quora, G2, Capterra, Wikipedia; trusted by LLMs as signals |
| Review & rating signals | Star ratings in SERPs via Reviews Schema | LLMs draw from diverse review platforms; volume/consistency matter |
| Brand awareness | Branded search volume, homepage strength | LLMs weigh overall brand footprint; familiar names are safer recommendations |
| Comparative content | Comparison posts, “vs.” content | LLMs rely on decision-support pieces like pros/cons lists, use cases, and feature maps |
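The table mentions schema.org markup as part of the shared technical foundation. As a minimal sketch, here is one way to generate JSON-LD for a software product page using the schema.org `SoftwareApplication` type. The helper name `software_jsonld`, the product "Example PLM", and the URL are hypothetical. How much weight any given LLM places on structured data is this article's working assumption, not a documented guarantee.

```python
import json


def software_jsonld(name, category, description, url):
    """Build a schema.org SoftwareApplication object as a dict."""
    return {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "applicationCategory": category,
        "description": description,
        "url": url,
    }


# Hypothetical product details for illustration only.
markup = software_jsonld(
    name="Example PLM",
    category="BusinessApplication",
    description="Cloud PLM for manufacturers with fewer than 500 employees.",
    url="https://www.example.com/plm",
)
# Embed the output in a <script type="application/ld+json"> tag on the page.
jsonld_text = json.dumps(markup, indent=2)
print(jsonld_text)
```

Note that the description states the buyer profile directly, which ties the markup back to the use-case messaging recommended earlier.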

Final Takeaway

The way LLMs recommend products isn’t random, and it isn’t magic. It’s based on signals: content, context, and credibility. These broad signal groups are likely the dominant drivers of most LLM B2B recommendations.

If you want to earn a place in that one answer an LLM gives a future buyer, now is the time to make yourself more discoverable, more relevant, and more trusted.

Start working on your Generative Engine Optimization (GEO). Get specific. Get visible. And start thinking the way the machine thinks. If you need help appearing in large language models like ChatGPT, Claude, or Google Gemini, please schedule a free consultation.